By: Josiah Huckins - 7/15/2022


When it comes to load and performance measurement, I've repeatedly come across a troubling misunderstanding among developers, other architects and even testing teams.

Simply put, load testing and performance testing are not the same thing. I've seen the terms "load" and "performance" used interchangeably when communicating with teams and clients, resulting in confusion at best and skewed perceptions of application behavior at worst. Understanding the differences will help you test properly, diagnose issues with your apps and improve them.

In this post, I'll define each test type, then dive into some details of performance testing and how we might automate it.

What is load testing?

Load testing is primarily a look at how your application handles volume. It measures how the application and its supporting infrastructure react to increases in the number of users, interactions or requests. This can help you answer specific questions such as:

- Can our application support the anticipated number of simultaneous users when we launch?

- Can our application support the actual number of simultaneous users as detailed in analytics?

- Can the application support a specific number of users over time without errors or crashes?

- How does the application react to a sudden increase in usage?

- What is the maximum number of simultaneous users our application can support before errors or crashes occur?

- How does the supporting hardware react to an increase in transactions, long running application threads or network saturation?
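Most of these questions come down to a handful of numbers per test run: throughput, error rate and tail latency. As a minimal JavaScript sketch, assuming you've exported per-request samples from your load tool into a simple `{ ok, ms }` shape (a hypothetical format, not JMeter's own results file), those numbers could be summarized like this:

```javascript
// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(sortedValues, p) {
  if (sortedValues.length === 0) return NaN;
  const rank = Math.ceil((p / 100) * sortedValues.length);
  return sortedValues[Math.max(0, rank - 1)];
}

// Summarize one load test run. Each sample is { ok: boolean, ms: number },
// a hypothetical shape used only for this illustration.
function summarizeLoadTest(samples, durationSeconds) {
  const latencies = samples.map((s) => s.ms).sort((a, b) => a - b);
  const errors = samples.filter((s) => !s.ok).length;
  return {
    requests: samples.length,
    throughputPerSec: samples.length / durationSeconds,
    errorRate: samples.length ? errors / samples.length : 0,
    p95Ms: percentile(latencies, 95),
  };
}

// Illustrative input: 4 requests over 2 seconds, one of which failed.
const summary = summarizeLoadTest(
  [
    { ok: true, ms: 120 },
    { ok: true, ms: 180 },
    { ok: false, ms: 950 },
    { ok: true, ms: 210 },
  ],
  2
);
console.log(summary); // { requests: 4, throughputPerSec: 2, errorRate: 0.25, p95Ms: 950 }
```

Tail percentiles such as p95 tend to reveal problems under load that averages hide.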

To generate load for such a test, you need a way to easily simulate many users and/or transactions. Likely the most popular tool (at the time of this writing) is Apache JMeter. We won't get into the finer details of setting up JMeter test scripts now; just know that it lets you generate many simulated HTTP requests against a web application. It provides options for "simultaneous" requests for concurrency measurements (note that truly simultaneous requests require multiple CPUs on the load-generating machine or, preferably, a distributed group of load generation nodes).
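To illustrate the "simultaneous" caveat, here is a minimal JavaScript sketch of several virtual users starting requests at the same moment. It is not a substitute for JMeter, and `fakeRequest` is a hypothetical stand-in for a real HTTP call (in Node 18+ you could swap in `fetch` against a test environment).

```javascript
// Start `virtualUsers` tasks at once and record per-user latency and success.
async function runConcurrent(virtualUsers, task) {
  const startedAt = Date.now();
  const results = await Promise.all(
    Array.from({ length: virtualUsers }, async (_, user) => {
      const t0 = Date.now();
      try {
        await task(user);
        return { user, ok: true, ms: Date.now() - t0 };
      } catch (err) {
        return { user, ok: false, ms: Date.now() - t0, error: String(err) };
      }
    })
  );
  return { wallClockMs: Date.now() - startedAt, results };
}

// Hypothetical request: takes ~50 ms, and user 2 gets a simulated server error.
const fakeRequest = (user) =>
  new Promise((resolve, reject) =>
    setTimeout(() => (user === 2 ? reject(new Error('HTTP 503')) : resolve()), 50)
  );

runConcurrent(5, fakeRequest).then(({ wallClockMs, results }) => {
  console.log(`5 users finished in ~${wallClockMs} ms`);
  console.log(results.filter((r) => !r.ok));
});
```

Because Node runs all of these on a single event loop, the requests overlap in time but are not processed in parallel by multiple CPUs, which is why serious load generation is spread across several machines.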

Creating and executing load testing plans is a dense subject, one that deserves its own dedicated post, so I won't get into it here.

What is performance testing?

Performance testing measures the time it takes an application to perform its primary or secondary functions: the time required to process a request (input) and generate a response (output). If load testing measures how the application handles many requests or transactions, performance testing takes a detailed look at a single one of them. This can help you answer questions such as:

- How long does it take the application to modify a value?

- How long does it take the application to provide an update to the user's screen via redraw?

- How does the application respond to a dataset that's too small or too large for an input buffer?

- What time is required for the server to read an application data object from CPU registers, or from memory?

- How many threads are needed to provide output and which ones might hold up execution of others?
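Whatever you measure, the basic pattern is the same: time a single operation, and repeat it a few times because individual timings are noisy. A minimal JavaScript sketch using Node's `perf_hooks` timer follows; the array-doubling operation is only a hypothetical stand-in for the function you actually want to measure.

```javascript
const { performance } = require('perf_hooks');

// Time one operation `runs` times and report the median plus the range.
async function timeOperation(operation, runs = 5) {
  const durations = [];
  let result;
  for (let i = 0; i < runs; i++) {
    const t0 = performance.now();
    result = await operation();
    durations.push(performance.now() - t0);
  }
  durations.sort((a, b) => a - b);
  // Middle value (the upper of the two middle values when `runs` is even).
  const medianMs = durations[Math.floor(durations.length / 2)];
  return { result, medianMs, minMs: durations[0], maxMs: durations[durations.length - 1] };
}

// Hypothetical operation under test: transform every value in a large array.
const data = Array.from({ length: 100000 }, (_, i) => i);
timeOperation(() => data.map((v) => v * 2).length).then((t) => {
  console.log(`result=${t.result}, median=${t.medianMs.toFixed(3)} ms`);
});
```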

As you can see, the types of measurements can vary wildly depending on what you want to examine. Unless you're offering customers SaaS, PaaS, IaaS or other service varieties, measuring raw server performance is probably not the most efficient use of your time. For most applications, focus should be placed on reducing output latency and on user perception.

User perception is paramount for web applications. Because it is subjective by nature, it can be hard to set acceptable ranges for real and perceived response latency.

Thankfully, we're aided by detailed guidance from Google via its Web Vitals initiative. It covers a number of key metrics relevant to applications used via a browser or similar web client, including Largest Contentful Paint (LCP), Cumulative Layout Shift (CLS) and Total Blocking Time (TBT). Google also offers numerous tools to test each of these metrics (and many others).
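These metrics come with published targets, so rating a measurement is a simple threshold check. In the sketch below, the LCP (2.5 s / 4 s) and CLS (0.1 / 0.25) boundaries are Google's published Web Vitals thresholds; the TBT boundaries (200 ms / 600 ms) are an assumption based on Lighthouse's color coding for mobile, so check the current documentation before relying on them.

```javascript
// Values at or below `good` rate "good", values above `poor` rate "poor",
// anything in between "needs improvement". TBT bounds are an assumption.
const THRESHOLDS = {
  lcpMs: { good: 2500, poor: 4000 },
  cls: { good: 0.1, poor: 0.25 },
  tbtMs: { good: 200, poor: 600 },
};

function rateMetric(name, value) {
  const t = THRESHOLDS[name];
  if (!t) throw new Error(`Unknown metric: ${name}`);
  if (value <= t.good) return 'good';
  if (value <= t.poor) return 'needs improvement';
  return 'poor';
}

// Rate every metric in a { metricName: value } object.
function rateAll(measurements) {
  return Object.fromEntries(
    Object.entries(measurements).map(([name, value]) => [name, rateMetric(name, value)])
  );
}

console.log(rateAll({ lcpMs: 2300, cls: 0.18, tbtMs: 750 }));
// { lcpMs: 'good', cls: 'needs improvement', tbtMs: 'poor' }
```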

Some details on Web Vitals tools

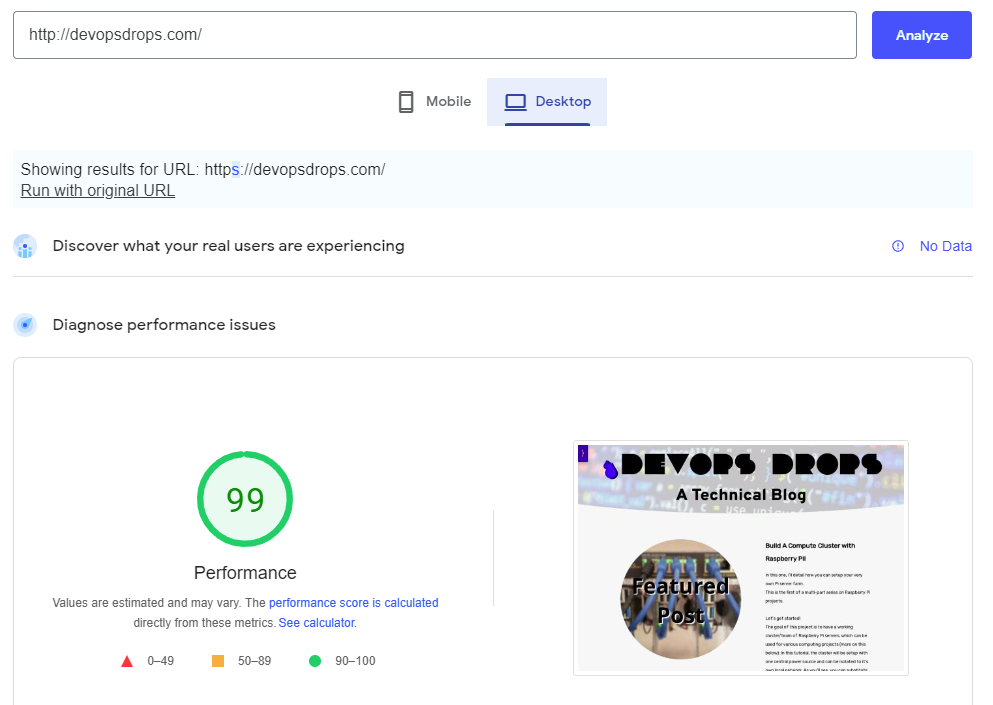

PageSpeed Insights offers a quick way to measure an application's performance and see how it stacks up against the expected values for metrics defined by Web Vitals. Here's a sample test from this site:

A score in the 90-100 range is preferable. If your score falls below this range, scroll down in the report to see which metrics are to blame. Most helpful is the list of improvement opportunities further down the report page, which suggests changes you might consider to improve the score.
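The 90-100 range corresponds to Lighthouse's "good" band; 50-89 is "needs improvement" and 0-49 is "poor". Lighthouse's JSON output stores category scores as a fraction between 0 and 1, so a small helper (a sketch, not part of either tool) can convert and classify them:

```javascript
// Convert a 0-1 category score to the 0-100 scale shown in reports and classify it.
function scoreBand(score0to1) {
  const score = Math.round(score0to1 * 100);
  if (score >= 90) return { score, band: 'good' };
  if (score >= 50) return { score, band: 'needs improvement' };
  return { score, band: 'poor' };
}

console.log(scoreBand(0.93)); // { score: 93, band: 'good' }
console.log(scoreBand(0.61)); // { score: 61, band: 'needs improvement' }
```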

Another very similar tool is Lighthouse. It can be accessed in the developer tools of Chromium-based browsers, and lets you run tests similar to those run via PageSpeed Insights. Note an important distinction between these two tools: PageSpeed Insights provides real-user (field) data from usage in the wild, drawn from the Chrome User Experience Report when a page has enough traffic, alongside a Lighthouse lab run, whereas Lighthouse alone provides lab data from a simulated setup. Each has its place in your app performance measurements.
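PageSpeed Insights is also available as an HTTP API (v5), which returns both kinds of data in one JSON response: `loadingExperience` holds the field data and `lighthouseResult` holds the lab run. A minimal sketch of building a request URL follows; the helper name is hypothetical, and Google recommends an API key for automated or frequent queries.

```javascript
// Documented v5 endpoint for the PageSpeed Insights API.
const PSI_ENDPOINT = 'https://www.googleapis.com/pagespeedonline/v5/runPagespeed';

// Build a request URL for one page. `strategy` is "mobile" or "desktop".
function buildPsiRequest(pageUrl, { strategy = 'mobile', apiKey } = {}) {
  const normalized = strategy.toUpperCase();
  if (!['MOBILE', 'DESKTOP'].includes(normalized)) {
    throw new Error(`strategy must be "mobile" or "desktop", got "${strategy}"`);
  }
  const url = new URL(PSI_ENDPOINT);
  url.searchParams.set('url', pageUrl); // URLSearchParams handles the encoding
  url.searchParams.set('strategy', normalized);
  url.searchParams.set('category', 'PERFORMANCE');
  if (apiKey) url.searchParams.set('key', apiKey);
  return url.toString();
}

console.log(buildPsiRequest('https://devopsdrops.com/', { strategy: 'desktop' }));
```

Fetching the resulting URL (for example with `fetch` in Node 18+) returns the JSON report, so field and lab numbers can be compared side by side.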

Often one of the goals of performance testing is to integrate it into your SDLC process, so performance is always tested with new code releases and regressions are discovered early. This is where Lighthouse shines, allowing you to simulate performance for changes made to your app, before real users see them.

Lighthouse integration with SDLC processes

Lighthouse is also available as an npm package, which lets you run Lighthouse against a URL via the provided CLI. This can easily be scripted, taking a list of URLs as input and testing each in sequence.
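The test script in this post is Bash-based; as an alternative illustration only, here is a minimal Node.js sketch of the same loop. It accepts a comma- or newline-separated URL list and builds arguments for the `lighthouse` CLI using its `--output`, `--output-path`, `--only-categories`, `--chrome-flags`, `--quiet` and `--preset` flags. The helper names and the report file naming scheme are hypothetical.

```javascript
const { execFileSync } = require('child_process');
const fs = require('fs');
const path = require('path');

// Accept either "a.com,b.com" or the contents of a text file with one URL per line.
// Bare hostnames get an https:// prefix (an assumption; adjust for your sites).
function parseUrlList(input) {
  return input
    .split(/[,\r\n]+/)
    .map((s) => s.trim())
    .filter(Boolean)
    .map((s) => (/^https?:\/\//i.test(s) ? s : `https://${s}`));
}

// Build lighthouse CLI arguments for one URL and form factor.
function lighthouseArgs(url, formFactor, outDir) {
  const safeName = url.replace(/^https?:\/\//i, '').replace(/[^a-z0-9]+/gi, '_');
  const args = [
    url,
    '--output=json',
    `--output-path=${path.join(outDir, `${safeName}-${formFactor}.json`)}`,
    '--only-categories=performance',
    '--chrome-flags=--headless',
    '--quiet',
  ];
  // Lighthouse emulates mobile by default; the desktop preset switches form factor.
  if (formFactor === 'desktop') args.push('--preset=desktop');
  return args;
}

// Test each URL in sequence so concurrent Chrome instances don't skew each other's scores.
function runAll(urls, outDir) {
  fs.mkdirSync(outDir, { recursive: true });
  for (const url of urls) {
    for (const formFactor of ['mobile', 'desktop']) {
      execFileSync('lighthouse', lighthouseArgs(url, formFactor, outDir), { stdio: 'inherit' });
    }
  }
}

const urls = parseUrlList('devopsdrops.com, https://example.com/about');
console.log(lighthouseArgs(urls[0], 'desktop', 'reports'));
// runAll(urls, 'reports'); // needs the lighthouse npm package and Chrome/Chromium installed
```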

The results can be output as JSON, which makes it easy to process multiple test results. This is helpful if you need to set up gating in your release pipelines, stalling the next step or reverting changes if scores fall below a certain threshold.
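For example, each JSON report (Lighthouse calls it the LHR) keeps the performance score under `categories.performance.score` (a value from 0 to 1) and each metric under `audits[<id>].numericValue` in milliseconds. Here is a minimal sketch of extracting the numbers this post cares about. The `sample` object is a trimmed, made-up report used only for illustration.

```javascript
const fs = require('fs');

// Pull the headline numbers out of a parsed Lighthouse JSON report (LHR).
function extractMetrics(lhr) {
  const audit = (id) => lhr.audits?.[id]?.numericValue ?? null;
  const score = lhr.categories?.performance?.score;
  return {
    // Newer Lighthouse versions use finalDisplayedUrl; older ones use finalUrl.
    url: lhr.finalDisplayedUrl ?? lhr.finalUrl ?? lhr.requestedUrl,
    formFactor: lhr.configSettings?.formFactor ?? 'unknown',
    // The score is null when the run errored, so don't coerce it to 0.
    score: typeof score === 'number' ? Math.round(score * 100) : null,
    lcpMs: audit('largest-contentful-paint'),
    ttiMs: audit('interactive'),
    tbtMs: audit('total-blocking-time'),
  };
}

// Convenience wrapper for a report saved with --output=json.
function extractFromFile(file) {
  return extractMetrics(JSON.parse(fs.readFileSync(file, 'utf8')));
}

// Trimmed, made-up report used only to show the shape.
const sample = {
  requestedUrl: 'https://devopsdrops.com/',
  finalUrl: 'https://devopsdrops.com/',
  configSettings: { formFactor: 'mobile' },
  categories: { performance: { score: 0.87 } },
  audits: {
    'largest-contentful-paint': { numericValue: 2710.4 },
    interactive: { numericValue: 3900 },
    'total-blocking-time': { numericValue: 180 },
  },
};

console.log(extractMetrics(sample));
```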

(Use caution when setting up your gates! Lighthouse scores vary based on a number of factors, such as network conditions and the load on the test machine. It's advisable to allow a margin of error of at least +/- 5 points between test runs, and to set the lower threshold accordingly.)
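One way to apply that margin, sketched below under a few assumptions (scores on the 0-100 scale, several runs per URL, and a pipeline step that fails on a non-zero exit code), is to compare the median of multiple runs against the target minus the margin. Taking the median of repeated runs is also what the Lighthouse documentation suggests for reducing variability. The URLs and scores in the example are illustrative.

```javascript
// Median of an array of numbers (average of the two middle values for even lengths).
function median(values) {
  const s = [...values].sort((a, b) => a - b);
  const mid = Math.floor(s.length / 2);
  return s.length % 2 ? s[mid] : (s[mid - 1] + s[mid]) / 2;
}

// Fail any URL whose median score is below (target - margin).
function gate(scoresByUrl, target, margin = 5) {
  const floor = target - margin; // tolerate normal run-to-run variance
  const failures = [];
  for (const [url, scores] of Object.entries(scoresByUrl)) {
    const m = median(scores);
    if (m < floor) failures.push({ url, median: m, floor });
  }
  return { passed: failures.length === 0, failures };
}

const result = gate(
  { 'https://devopsdrops.com/': [91, 86, 88], 'https://devopsdrops.com/blog': [72, 80, 76] },
  90
);
console.log(result);
// In a pipeline step you might then use: if (!result.passed) process.exitCode = 1;
```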

The Test Script

The following is a simple script that runs a test using a provided list of URLs (comma separated) or a provided text file. Each test result is saved in a folder as HTML and JSON. A CSV report is then generated, listing LCP, TTI (Time to Interactive), TBT and the overall score for each URL (for both mobile and desktop). Note that this assumes you have Node.js and the Lighthouse CLI installed, as well as the jq, tr and chromium-browser commands available to Bash.
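If you'd rather post-process the JSON reports with Node, the CSV step is easy to sketch in JavaScript. The column names here are assumptions rather than the original script's exact layout, and the values are illustrative:

```javascript
// Hypothetical column layout: one row per URL and form factor.
const COLUMNS = ['url', 'formFactor', 'lcpMs', 'ttiMs', 'tbtMs', 'score'];

// Quote fields containing commas, quotes or newlines (RFC 4180 style).
function csvField(value) {
  const text = value === null || value === undefined ? '' : String(value);
  return /[",\r\n]/.test(text) ? `"${text.replace(/"/g, '""')}"` : text;
}

function toCsv(rows) {
  const lines = [COLUMNS.join(',')];
  for (const row of rows) {
    lines.push(COLUMNS.map((c) => csvField(row[c])).join(','));
  }
  return lines.join('\n') + '\n';
}

const csv = toCsv([
  { url: 'https://devopsdrops.com/', formFactor: 'mobile', lcpMs: 2710, ttiMs: 3900, tbtMs: 180, score: 87 },
  { url: 'https://devopsdrops.com/?a=1,2', formFactor: 'desktop', lcpMs: 900, ttiMs: 1100, tbtMs: 0, score: 99 },
]);
process.stdout.write(csv);
```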

This script could be added as a post deploy step in your release pipeline.

To do this in Azure DevOps, check out Shell Script tasks.

For AWS, check out AWS Shell Script tasks.

Lighthouse Testing Service

Invoking this script directly is great, but we can also set up our own "web" service to call the script from any HTTP client. Since the instance hosting the script should already have Node.js installed, we can build our own HTTP server using Node. This server listens for requests on a port we define and invokes the Lighthouse script. Depending on the parameters, the server can respond with a single test result or store results for later processing.

As with any web-based service, there are some infrastructure considerations. If this is to be used only within your network, you could get away with requesting the server's IP and your configured port. However, it's preferable to set up DNS records pointing a domain at the server, which also allows you to use a certificate and HTTPS.

For my example, let's assume I've configured a record on devopsdrops.com to point host "lh" at my Lighthouse testing server. In my Node HTTP service, I've configured it to listen on port 99 (more on this in a minute). With that, we can call the service at: http://lh.devopsdrops.com:99

Now, on to the JavaScript!

This uses the Node.js http module to listen for requests and executes the Lighthouse-Tester.sh script using the child_process module.

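Note some key details. The host is set to 0.0.0.0, which allows our HTTP service to listen on any network interface. The port, as mentioned above, is set to 99 (on Linux, binding to a port below 1024 normally requires root privileges or the CAP_NET_BIND_SERVICE capability):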

```javascript
const host = '0.0.0.0'; // listen on every network interface
const port = 99;
```

Our server is set to listen for requests via:

```javascript
server.listen(port, host, () => {
  console.log(`LightHouse Test Service is running on ${host}:${port}`);
});
```
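As a rough sketch of how the http and child_process modules might be wired together (not the author's actual service), the handler below reads the query string, rejects requests missing required parameters and hands the rest to a runner function. The argument order passed to `Lighthouse-Tester.sh` is an assumption; adjust it to match your script.

```javascript
const http = require('http');
const { execFile } = require('child_process');

// Hypothetical runner: the argument order is an assumption, match it to your script.
function runScript({ testType, urls, reportType, format }, callback) {
  execFile(
    './Lighthouse-Tester.sh',
    [testType, urls, reportType, format],
    { timeout: 5 * 60 * 1000, maxBuffer: 20 * 1024 * 1024 },
    callback // receives (error, stdout, stderr)
  );
}

// Wrap any runner with the (params, callback) shape in an HTTP request handler.
function createHandler(runner) {
  return (req, res) => {
    const query = new URL(req.url, 'http://localhost').searchParams;
    const params = {
      testType: query.get('testType'),
      urls: query.get('urls'),
      reportType: query.get('reportType') || 'mobile',
      format: query.get('format') || 'json',
    };
    if (!params.testType || !params.urls) {
      res.writeHead(400, { 'Content-Type': 'text/plain' });
      res.end('testType and urls are required\n');
      return;
    }
    runner(params, (err, stdout) => {
      if (err) {
        res.writeHead(500, { 'Content-Type': 'text/plain' });
        res.end(`Test failed: ${err.message}\n`);
        return;
      }
      const type = params.format === 'html' ? 'text/html' : 'application/json';
      res.writeHead(200, { 'Content-Type': type });
      res.end(stdout);
    });
  };
}

const server = http.createServer(createHandler(runScript));
// server.listen(99, '0.0.0.0'); // start listening as in the snippet above
```

Using `execFile` with an argument array, rather than building a shell command string, keeps query-string values from being interpreted by a shell. Injecting the runner also lets you exercise the handler without launching Chrome.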

You may also notice that there are some required URL parameters. These tell our service how we want to run a test. For example, to run a test against a single URL and see the results in our client, we set testType to single and pass that URL in urls. We can also optionally set reportType to desktop or mobile, and choose the desired format.

Going back to the sample http://lh.devopsdrops.com:99 endpoint, we could run a test against my site via:

http://lh.devopsdrops.com:99?testType=single&urls=devopsdrops.com&reportType=mobile&format=json
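Before handing those values to a shell script, it's worth validating them. A minimal sketch follows; the `batch` test type and the defaults are assumptions for illustration, not documented behavior of the service.

```javascript
// Allowed values per parameter; "batch" is a hypothetical second test type.
const ALLOWED = {
  testType: ['single', 'batch'],
  reportType: ['mobile', 'desktop'],
  format: ['json', 'html'],
};

function parseTestRequest(requestUrl) {
  const q = new URL(requestUrl).searchParams;
  const req = {
    testType: q.get('testType'),
    urls: (q.get('urls') || '').split(',').map((u) => u.trim()).filter(Boolean),
    reportType: q.get('reportType') || 'mobile',
    format: q.get('format') || 'json',
  };
  const errors = [];
  for (const [key, allowed] of Object.entries(ALLOWED)) {
    if (!allowed.includes(req[key])) errors.push(`${key} must be one of: ${allowed.join(', ')}`);
  }
  if (req.urls.length === 0) errors.push('urls is required');
  if (req.testType === 'single' && req.urls.length > 1) errors.push('single tests accept exactly one URL');
  return { ok: errors.length === 0, errors, request: req };
}

console.log(parseTestRequest(
  'http://lh.devopsdrops.com:99?testType=single&urls=devopsdrops.com&reportType=mobile&format=json'
));
```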

This basic service could be enhanced to support other test scenarios, such as pulling URL lists from a sitemap or database. If you run releases using on-prem tools or your own hosted orchestration server, this may be a more appropriate way to isolate your test environment from the build environment. The build server could invoke your HTTP service via a Java HTTP client or tried-and-true cURL.
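For the sitemap scenario, a minimal sketch could extract the `<loc>` entries from a standard XML sitemap and join them into the comma-separated `urls` value. The sample sitemap is illustrative, and a regex is only adequate for simple, well-formed files; use a real XML parser for anything more complex.

```javascript
// Extract page URLs from the <loc> elements of a simple XML sitemap.
function urlsFromSitemap(xml, limit = Infinity) {
  const urls = [];
  const locPattern = /<loc>\s*([^<]+?)\s*<\/loc>/g;
  let match;
  while ((match = locPattern.exec(xml)) !== null && urls.length < limit) {
    urls.push(decodeXmlEntities(match[1]));
  }
  return urls;
}

// Decode the five predefined XML entities; &amp; goes last so "&amp;lt;" becomes "&lt;".
function decodeXmlEntities(text) {
  return text
    .replace(/&lt;/g, '<')
    .replace(/&gt;/g, '>')
    .replace(/&quot;/g, '"')
    .replace(/&apos;/g, "'")
    .replace(/&amp;/g, '&');
}

// Illustrative sitemap content.
const sitemap = `<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://devopsdrops.com/</loc></url>
  <url><loc>https://devopsdrops.com/?page=2&amp;sort=new</loc></url>
</urlset>`;

console.log(urlsFromSitemap(sitemap).join(','));
```

Encode the joined list with `encodeURIComponent` before placing it in the query string, since sitemap URLs can contain `&` and `?`.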